Will AI Earn You Trust? A CEO’s Guide to Building Trust in Businesses!

The real infrastructure of AI is built on trust; without it, everything else breaks.

But can we trust what the machine decides? That’s the real question.

As we see in daily life, AI no longer sits quietly in the background; in many businesses it now makes the decisions. But while AI’s influence has grown, our confidence in its decisions hasn’t caught up.

Who’s building it? What values guide it? Can we trust it? The list goes on…

“We can no longer separate business outcomes from ethical outcomes. Revenue drives growth, but trust sustains it.” - Suraj Arukil

Trust starts at the top. That’s on us, the CEOs.

According to a recent KPMG survey, three in five executives (61 percent) are skeptical about trusting AI systems.

And this is where CEOs come in: not just as adopters of AI, but as the people who earn belief in it.

Trust in AI Isn’t the Same as Trust in Traditional Technologies

Traditionally, when we talked about trust in technology, we meant things like uptime, performance, reliability, and whether the system worked the way we expected.

But with AI, that definition doesn’t hold for long. We’re now dealing with systems that learn, evolve, and make decisions in ways even their creators don’t always fully understand.

So, naturally, the trust bar gets raised.

Now, trust means:

- Is the AI aligned with our values?

- Is it fair and explainable?

- Is it being used with intention and accountability?

Trust in AI is about ethical alignment, transparency, and impact. And yes, that’s harder. But also more important than ever.

The Cost of Losing Trust and the Advantage of Earning It

When trust breaks, the damage is quicker and more disastrous than we think.

Reputation takes a hit. Customers churn. Even your own teams lose confidence in the tech they’re supposed to champion.

Take the case of Apple, which agreed to pay millions to settle claims that Siri recorded users’ private conversations without their consent (Source). The issue wasn’t just legal; it was about broken expectations and damaged credibility.

Think about it. The more advanced your AI, the more likely it’s doing complex, opaque things, and the harder it becomes to explain… and trust.

But if you get trust right, you don’t just avoid disaster. You win:

- You attract better talent (because smart people want to work on responsible things)

- You build long-term customer loyalty

- You get society’s “green light” to innovate

- You set the standards, instead of catching up to them

So, What Breaks Trust in AI?

We’ve seen the pitfalls before, and they’re becoming more visible every day:

Biased Algorithms Make Unfair Decisions

When algorithms are trained on biased data, they inherit and amplify those biases. This can lead to decisions that unfairly impact certain groups.

Customer Data Used Without Consent

Data privacy often takes a backseat in the AI race. When companies use customer data without clear consent, they risk breaching trust and compliance.

Deepfakes and Misinformation Are Spreading

With generative AI advancing rapidly, deepfakes and synthetic content are becoming more realistic and more dangerous. From fake news to identity theft, misinformation can quickly spiral out of control.

These aren’t just technical flaws; they’re ethical breakdowns that damage relationships and stall progress.

The CEO’s Role in Building Trust

Let’s be clear: you can’t delegate trust.

If you’re a CEO thinking, “We’ve got a great tech team, they’ll handle the trust part,” hate to break it to you, but no.

So what does leading on trust look like?

Champion Ethical AI from the Top

AI ethics is a leadership responsibility. It trickles down into every model, feature, and line of code. Leaders set the tone: are we building something that just works, or something that works for good? Start by putting integrity on your AI roadmap, right next to innovation.

Make Trust a Board-Level Conversation

When AI is shaping decisions, predicting behaviors, and automating outcomes, trust is no longer a soft metric. It is critical to your business. Boards should treat AI risk with the same rigor as financial risk. If your AI can’t be explained at the top, it’s not ready for the real world.

Foster Cross-Functional Governance

AI built in a vacuum? That’s a recipe for disaster. Good governance means getting Legal, HR, Engineering, and Marketing in the same room, early and often. Everyone brings something to the table. It’s time they had a seat at it.

Be Radically Transparent

No more black boxes and smoke screens. If your AI struggles in certain scenarios, just say it. If it’s learning, own it. People respect the real over the perfect. In a world flooded with AI buzzwords, clarity is your brand’s best flex.

Promote Human-AI Collaboration, Not Replacement

AI should elevate people, not edge them out. Let’s stop talking about replacing jobs and start talking about replacing drudgery. When humans and machines collaborate, magic happens: faster workflows, smarter decisions, and a whole lot less burnout. Empower your people with AI, and they’ll empower your business in return.

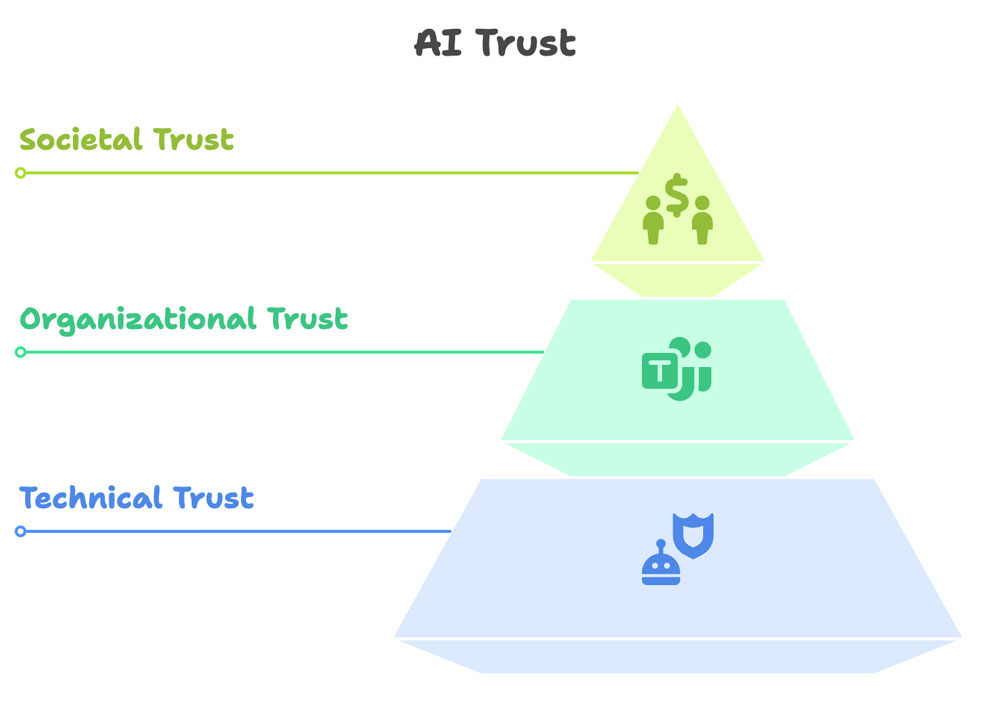

Three Layers of Trustworthy AI

At Nuvento, we think of AI trust in three key layers:

- Technical Trust: Is the system robust, accurate, explainable?

- Organizational Trust: Are teams aligned on how AI is built and used? Is there governance in place?

- Societal Trust: Is the AI aligned with public values? Does it promote fairness and inclusion?

A CEO Framework for Leading with Trust

Here’s how I believe we scale AI trust across the business:

1. Bring More Transparency to the Product

Explainability must be part of the product DNA. We make sure our customers and teams understand what our AI is doing and why.

2. Make Governance Proactive

We’ve built internal review processes and ethical checkpoints into our AI lifecycle. They’re not always perfect, and we’re committed to improving them.

3. Develop Human-Centric Designs

We design for real people, including those at the margins. Because inclusion at the design stage means equity in outcomes.

4. Partner with Policy and Regulation

We don’t fear regulation. We engage with it. Responsible frameworks make it easier for innovation to earn public trust.

What We’re Doing at Nuvento

At Nuvento, trust is baked into how we build our solutions.

We’ve set up an internal advisory council to review high-impact deployments. It brings together product, legal, engineering, and HR to evaluate risks and ensure alignment with our principles.

We’ve also launched AI literacy programs across teams, because you can’t expect people to trust what they don’t understand.

We’re still learning. We’ve made mistakes, iterated, and improved. And that’s part of the journey.

Trust Is a Leadership Imperative

Here’s what I’ve come to believe: If we want to lead in the age of intelligent systems, we must take ownership of how those systems behave, how they’re perceived, and what values they reflect.

Let me sum it up with three pointers:

- Setting proper boundaries

- Being transparent about what you do

- Actively listening to stakeholders

Because in a world increasingly run by intelligent agents, the most powerful and human trait a leader can model… is trust.

Let’s Talk.

Want to know how we’re weaving trust into our AI practice at Nuvento?

Let’s connect 1:1.

Because the future of AI isn’t just about what it can do; it’s about what we choose to do with it.